Vision-RTK 2: precise positioning under all conditions

- Real-time positioning enabled by sensor fusion

- Leading performance in GNSS-degraded areas

- Fast system integration and easy deployment

Expands operational capability

Best-in-class positioning, enabled by sensor fusion technology

Follow the blue line to witness the Vision-RTK 2’s reliable positioning in GNSS-degraded areas.

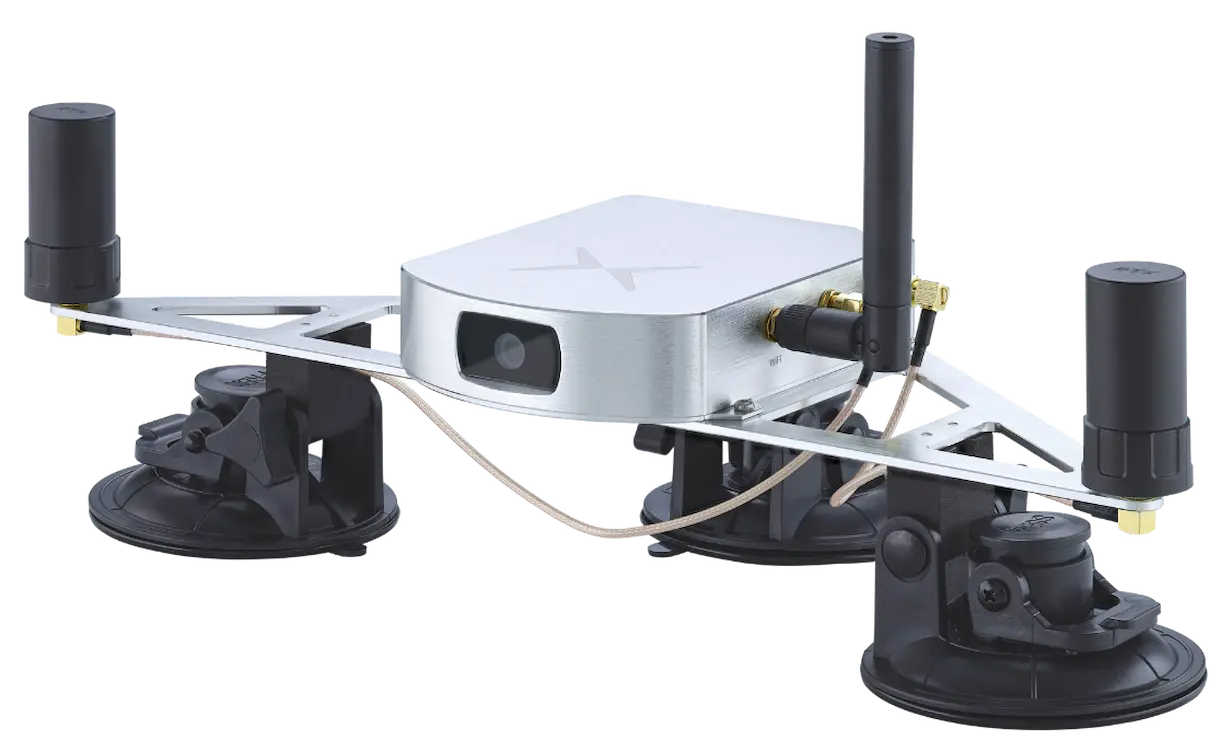

Sensor fusion of GNSS, IMU and visual odometry for precise global positioning

Vision-RTK 2's fusion engine leverages the strengths of multiple independent sensor technologies and compensates for their individual weaknesses to provide precise positioning everywhere.
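To illustrate the general idea of combining independent sensors, here is a minimal sketch of inverse-variance weighting, a textbook way to merge two estimates so that the more trustworthy one dominates. It is not Fixposition's fusion engine, whose internals are not described here; the function name `fuse` and all numbers are hypothetical.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Inverse-variance weighted average of two independent 1D estimates.

    Illustrative only: a textbook technique, not the Vision-RTK 2 algorithm.
    """
    w_a = 1.0 / var_a  # weight grows as the estimate becomes more certain
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)  # always smaller than either input variance
    return fused, fused_var


# Hypothetical values: a GNSS fix degraded by tall buildings (high variance)
# and a visual odometry estimate (lower variance), both in metres.
gnss_pos, gnss_var = 12.0, 4.0
vio_pos, vio_var = 10.0, 1.0

fused_pos, fused_var = fuse(gnss_pos, gnss_var, vio_pos, vio_var)
print(f"fused position: {fused_pos:.2f} m, variance: {fused_var:.2f} m^2")
```

In this toy case the fused position lands closer to the lower-variance estimate, and the fused variance is lower than that of either sensor alone, which is the sense in which one sensor can compensate for another's weakness.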

OUR SOLUTION

Vision-RTK 2: Deep sensor fusion technology

Reliable performance

Precise positioning under all conditions

Evaluate our solution

Get our ready-to-deploy Starter Kit for testing and evaluation

Benchmark performance for your use case

5-minute setup, with comprehensive documentation

Out-of-the-box solution to get started with ease

Includes two 60-minute support calls with an application engineer

Ready-to-use Vision-RTK 2 Starter Kit

Fixposition Distributors

Find the Vision-RTK 2 in your area

ARGO: Japan

Go to shop: https://www.argocorp.com

MYBOTSHOP: Europe

Go to shop: www.mybotshop.de

Movella: International

Go to shop: www.movella.com

T-Max: China

Go to shop: www.t-maxtech.com

InDro Robotics: North America

Go to shop: https://indrorobotics.ca/

FAQ

Frequently asked questions

Where can I find Fixposition’s GNSS transformation library and information about the ROS driver?

If I select Fixposition’s solution, what journey can I expect?

Evaluation stage

We will work with you to study your application, hardware, software platform, and specific requirements. You can use your Starter Kit to evaluate our positioning sensor in your application environment. We will provide a platform where you can upload your data for review by our engineers, which helps us support you.

Design-in stage

Our off-the-shelf solution is plug-and-play, thanks to our compliance with industry-standard interfaces and protocols. We can adapt our hardware and software to your application requirements for high-volume sales. Once our sensor is integrated, we will work with you to optimize performance via fine-tuning.

After-sales support

After your solution goes into production, we offer continued software updates to ensure your product is up to date. As your product matures, we would be pleased to work with you to support feature requests and your evolving needs.

What is the difference between Vision-RTK 2 and other positioning products?

Vision-RTK 2 combines the best of global positioning (enabled by GNSS) and relative positioning (enabled by visual-inertial odometry, VIO).

We have developed state-of-the-art sensor fusion technology to overcome weaknesses in individual sensors and provide high-precision position information in all environments. Our technology removes the time-dependent drift that is typical of solutions that rely solely on inertial measurement units (IMUs) for dead reckoning.
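To make the time-dependent drift of IMU-only dead reckoning concrete, the sketch below double-integrates a small constant accelerometer bias and reports the resulting position error. The bias value, time step, and function name are hypothetical and chosen for illustration; they are not Vision-RTK 2 specifications.

```python
def dead_reckoning_error(bias, duration, dt=0.01):
    """Position error (m) from double-integrating a constant accelerometer bias.

    bias: uncorrected accelerometer bias in m/s^2 (hypothetical value)
    duration: time without any external position correction, in seconds
    """
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(round(duration / dt)):
        velocity_error += bias * dt            # first integration: velocity
        position_error += velocity_error * dt  # second integration: position
    return position_error


bias = 0.05  # m/s^2, assumed for illustration
err_10s = dead_reckoning_error(bias, 10.0)
err_60s = dead_reckoning_error(bias, 60.0)
print(f"error after 10 s: {err_10s:.2f} m, after 60 s: {err_60s:.2f} m")
```

With these assumed numbers the error is roughly 2.5 m after 10 seconds and roughly 90 m after 60 seconds, following the 0.5 · bias · t² growth expected from double integration. This is why an IMU-only solution needs an absolute reference such as GNSS, or a relative one such as visual odometry, to bound its error.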

We have developed a unique technique that delivers a more robust and precise solution than the typical Kalman filter-based approaches used by other solutions on the market.

Our solution integrates easily into a variety of robots and can accept additional sensors as inputs as they become available.

In what type of applications have your customers deployed the Vision-RTK 2?

All forms of autonomous solutions, including:

- Autonomous shuttles.

- Small/medium-sized robots used for delivery, patrolling, rescue, and cleaning.

- Robot lawnmowers.

- Agricultural robots such as tractors, harvesters, planters, and sprayers.

- Small high-volume agricultural robots.

Beyond the Vision-RTK 2 sensor, what else do I need?

- An internet connection.

- A third-party RTK correction data subscription (NTRIP). For details about our bundle options with Topcon, email sales@fixposition.com.

- Optionally: wheel tick sensor input.

Request your Starter Kit now

- Plug-and-play solution: Fully configured and calibrated to start evaluation immediately

- All-inclusive: Includes the antennas, cables, and batteries needed for full operation

- Open-source ROS 1/ROS 2 drivers for easy integration and adaptation to your system

Sensor fusion diagram labels. Inputs: GNSS observations, camera images, IMU measurements, wheel speed. Outputs: global position, velocity, orientation. Annotations: instantaneous heading estimate, adds redundancy, feature tracking, IMU’s distance-dependent drift.